
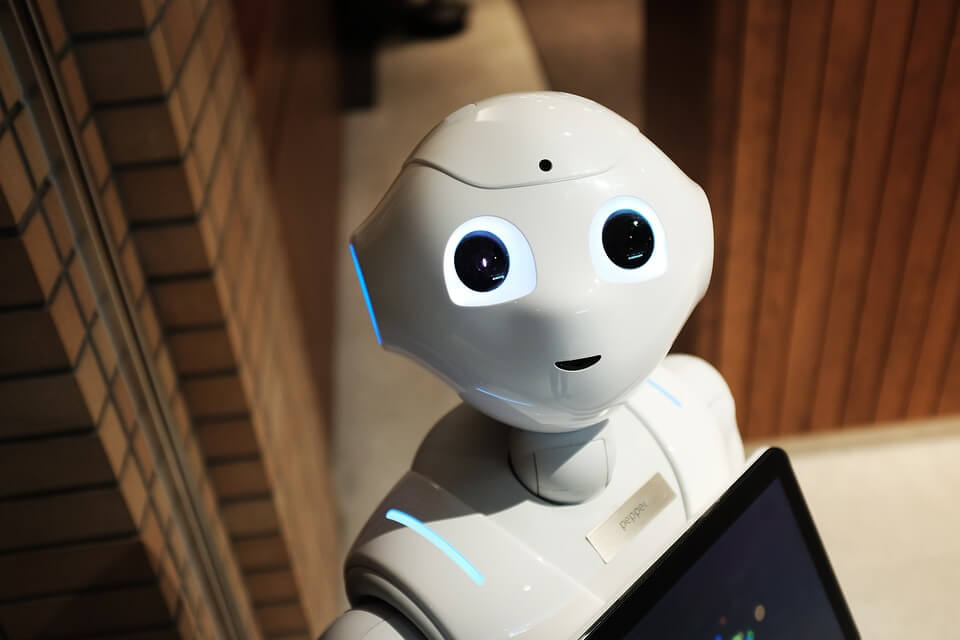

How companies can use artificial intelligence responsibly in 2018

Artificial intelligence is the future of many industries, but it does not come without challenges.

Artificial intelligence is coming, whether we like it or not, and it will be fueled by big data and machine learning. More importantly, it will have access to an endless supply of data and information about everyone.

This technology raises several questions and concerns, chiefly about how to deploy it in a responsible, well-intentioned manner.

As paranoid as it may seem, the biggest question is whether we can trust this technology to do what we want it to. That may seem silly, considering we have full control and we decide how it works and how it approaches decisions and conflict.

But true AI, the kind we’re striving to create and will soon be able to deploy, will be autonomous and forward-thinking. This is not just a scenario ripped straight out of a Hollywood blockbuster. Several influential people, including Elon Musk and a long list of industry experts, believe it’s a potentially damaging issue.

There is a host of other concerns too, for instance:

• What risks and trends will influence labor displacement?

• Unprecedented levels of protection and privacy will be necessary, covering both personal data and persona- or personality-based information.

• Knowledge, training, and education will be required to school not just industry professionals but the public as well.

• What legal policies and regulations will be necessary going forward? For instance, will laws need to be updated to include AI, neural networks, and modern robotics?

• How will this affect international and global economies?

• How do we decide what is the ethical and fair treatment of these systems, and what is not?

• What limitations do we set on how and where to leverage AI?

Furthermore, who is to blame when one of these systems or platforms malfunctions? Self-driving vehicles, for example, are supposedly safe and reliable, even safer than human drivers. The semantics of that claim don’t matter, at least not yet. What does matter is this: when an incident does occur, how do we decide whether AI is the culprit, and what do we do about it?

By 2020, a whopping 20 percent of companies will dedicate resources to overseeing neural networks, an advanced form of AI and machine learning. The revolution is coming, and it’s approaching faster than we realize.

Responsibility comes early

For a new parent, responsibility comes as soon as the child enters this world. You may not know exactly what to do, how to approach a situation or what decision to make, but make no mistake, the responsibility is still there.

The same can be said of artificial intelligence, machine learning, neural networks and similar technologies. These systems are already in place, and people are using them every day. No, the functionality might not be as advanced as we’d like, but it is improving. That’s hardly the point, though.

The responsibility has already fallen to us to govern and hash out appropriate use of these technologies. If we wait until tomorrow to concern ourselves with these concepts, it will be too late, which is exactly the point Elon Musk and fellow industry experts are trying to explain. The time to be responsible and act is now.

With something so widespread, the responsibility becomes a collective effort. No single official, government, country or individual is responsible in full. We all share the duties and burdens.

The problem with such rapidly advancing technologies is that it’s difficult for legislation, business ethics, and core values to keep pace. Innovation and change happen too fast. It is good news that many tech companies have pledged to use these platforms and systems responsibly, but who will police them? Who steps in to tell a party or business when it steps out of line? Again, the responsibility falls to us, the world at large.

We are seeing this now with self-driving vehicles. Many thought the technology wouldn’t be on our roadways and ready for a widespread rollout until far into the future. Now, as a society, we’re having to answer difficult ethical questions about its use.

More importantly, the government and related agencies are finding it hard to keep up. Many states have yet to pass a law or bill related to the technology. The House of Representatives is working on important regulations, but not nearly fast enough.

Aside from AI and neural networks, modern robotics also requires regulations and legal policies. (Source)

How can we make this happen?

If the burden falls on business owners, investors, entrepreneurs, and consumers, how do we facilitate it? What are some things we can do to honor and appropriately follow our responsibilities?

• We must support governments and help them establish a framework that allows AI to thrive while remaining responsible, ethical and harmless.

• We must come up with core principles to preserve security, privacy, trust and transparency for any and all involved with these technologies.

• We must establish an agency that can monitor, audit and take action against infringements of these values.

• Democratize decisions about which industries adopt these systems and how they are used. Are there, for instance, some places where AI should never go?

• Deploy wide-scale education and training programs to ensure everyone understands the technologies in use.

• Provide support systems and alternative work opportunities for uprooted or disrupted labor forces. Thirty-eight percent of jobs in the U.S. will likely be vulnerable to AI systems by the early 2030s.

• Establish sound, trustworthy and efficient cybersecurity practices and policies, especially those that protect and secure AI systems and robotics.

• Properly integrate human intelligence, skill, and experience with machine learning and related systems. If and when AI does take over a task, ensure it can operate safely in the absence of human oversight.

• Have open discussions about the future of the technology through conferences, meet-ups, and expos where industry leaders and experts share thoughts and advice on the current state of the industry.

• Establish a kill switch and rules of engagement for shutting down, rolling back or disabling automated systems, including when and why it is necessary to do so.

Of course, these ideas are just a baseline for how we should approach the future of the industry and related technologies. What thoughts do you have, if any, that were not addressed here?

—

DISCLAIMER: This article expresses my own ideas and opinions. Any information I have shared is from sources that I believe to be reliable and accurate. I did not receive any financial compensation for writing this post, nor do I own any shares in any company I’ve mentioned. I encourage readers to do their own diligent research before making any investment decisions.

-

Cannabis1 week ago

Cannabis1 week agoCannabis and the Aging Brain: New Research Challenges Old Assumptions

-

Africa2 weeks ago

Africa2 weeks agoUnemployment in Moroco Falls in 2025, but Underemployment and Youth Joblessness Rise

-

Crowdfunding6 days ago

Crowdfunding6 days agoAWOL Vision’s Aetherion Projectors Raise Millions on Kickstarter

-

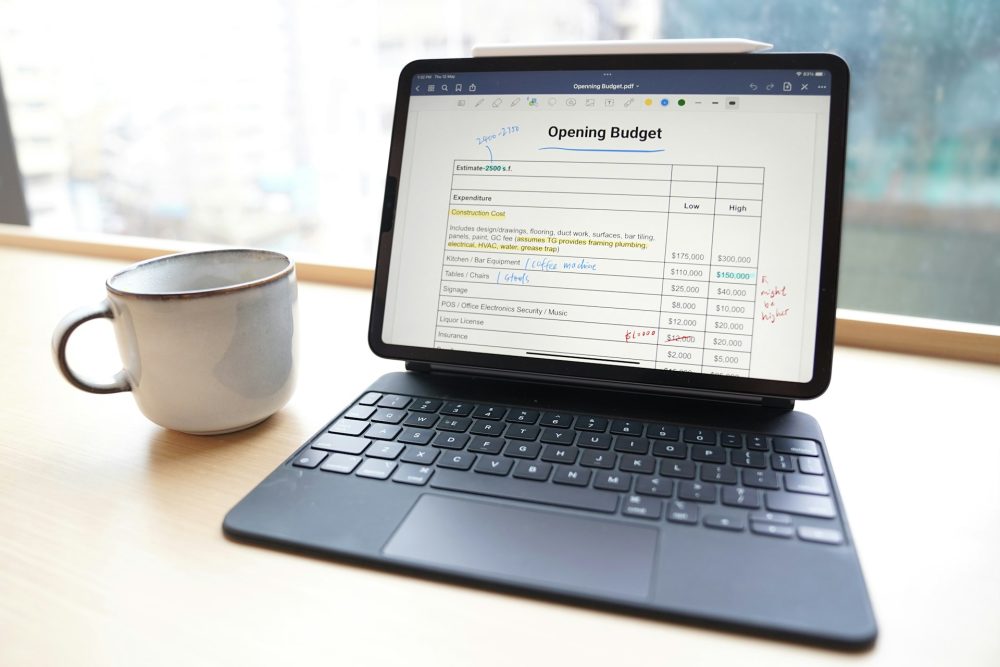

Fintech2 weeks ago

Fintech2 weeks agoFintower Secures €1.5M Seed Funding to Transform Financial Planning

You must be logged in to post a comment Login